The International Individual Tree Delineation (ITD) Contest, 2025

1. Background

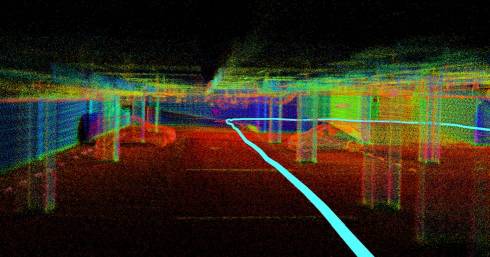

Individual trees, as the dominant and fundamental component of forests, play a crucial role in regulating the biophysical processes of forest ecosystems, such as radiation, vapor concentration, and temperature. Understanding individual-tree characteristics supports fine-scale forest management and protection. Light detection and ranging (LiDAR), also known as laser scanning (LS), provides a powerful solution for characterizing and understanding forest structure and function. Therefore, three-dimensional (3D) individual tree delineation (ITD) through LiDAR data has become a fundamental step toward extracting detailed tree-scale 3D structural traits.

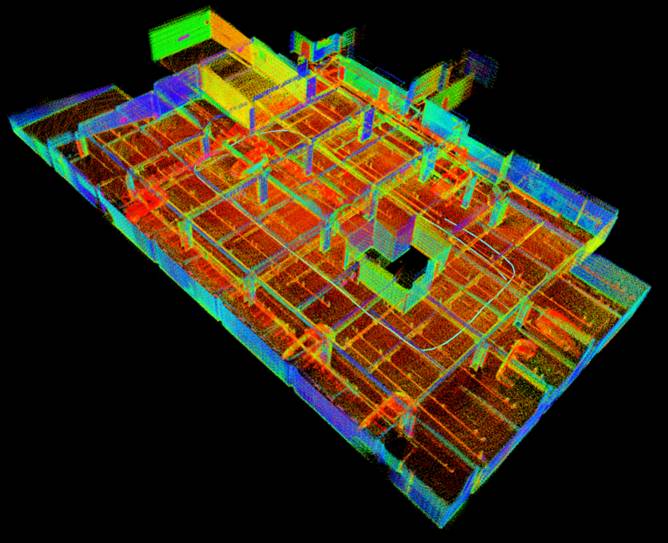

Figure 1. Individual tree delineation through point clouds supports fine-grained, tree-level trait extraction at both the individual and plot levels (Liang et al., 2024).

2. Contest overview

The International Individual Tree Delineation (ITD) Contest 2025 is the second event in this series, following the first ITD contest held in 2014 (details in Liang et al., 2018). The contest aims to benchmark and advance the state of the art (SOTA) in 3D ITD using close-range LiDAR data by evaluating and comparing a wide range of conventional machine learning (ML) and deep learning (DL) approaches. The International ITD Contest 2025 is organized with the support of the International Society for Photogrammetry and Remote Sensing (ISPRS).

Participants are encouraged to use the dataset provided by the contest to develop their own ITD methods and submit their inference results. The organizers will evaluate all submitted results and benchmark the methods using standardized procedures and metrics. The contest outcomes will be analyzed and reported to showcase the progress achieved over the past decade, identify remaining challenges, and outline future directions for development. The top six teams will be invited to the award ceremony (time and location to be confirmed) and will receive travel grants of up to USD 3,000.

To participate, please email the following information to itdcontest.mspace@gmail.com; the contest link will be sent in return:

- Team name and list of members (maximum of five, listed in order);

- Details of each member, i.e., real name, email, affiliation, and position;

- The member designated as the contact person.

3. Task

The Contest encourages participants to develop automated ITD methods using either DL or ML approaches. Multiple participants may form a team and share a single account on the online platform, with up to five members per team.

The contest is divided into three phases: development, testing, and results verification.

- Development phase: Participants may develop and refine their method using the datasets provided by the contest. Participation in this phase is optional for teams that have already developed their ITD method in previous studies.

- Testing phase: Participants refine their method using the testing set, run inference, and submit the final 3D delineation results through the online platform, along with the required materials sent by email to itdcontest.mspace@gmail.com, to receive their score and ranking.

- Results verification: The results of the top six teams will be verified, and the final list of winners will be announced after this phase.

All submissions from the development and testing phases will undergo quantitative evaluation using standardized procedures and metrics. The feedback, including scores and rankings, will be displayed on the leaderboard of the online platform.

More details about the tasks are available on the online platform.

4. Datasets

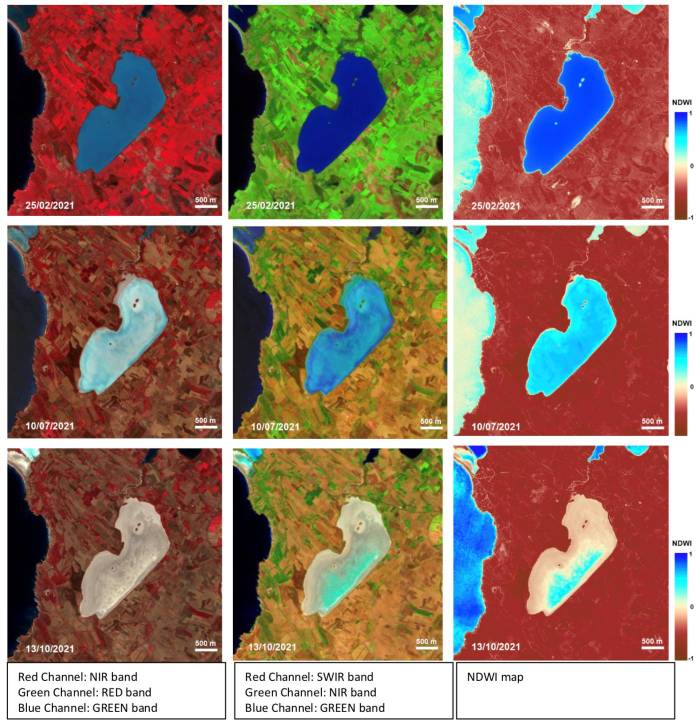

The ITD dataset provided by this contest consists of LiDAR point clouds collected from various forest and climate regions around the world using multiple platforms, including terrestrial (TLS), mobile (MLS), and unmanned aerial vehicle (ULS) systems. The dataset also includes tree-level annotations. Datasets are provided for participants to develop their ITD methods and to evaluate their performance.

The dataset includes point clouds both at their original resolution and at a resampled resolution of 5 cm (3D). Due to the limitations of the online system, evaluations will be conducted using the 5 cm resolution data. That is, participants can either process the data at the original resolution and downsample the results to 5 cm resolution, or work directly on the provided 5 cm resolution data.
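For teams that work at the original resolution, the downsampling step could look roughly like the sketch below. It assumes a simple voxel-grid rule (keep the first point that falls into each 5 cm cell, together with its predicted tree label); the function name `voxel_downsample` and the sample coordinates are hypothetical, and the resampling actually used to produce the provided 5 cm data is not described here, so the platform documentation should take precedence.

```python
import math


def voxel_downsample(points, labels, voxel=0.05):
    """Keep one labelled point per occupied voxel (assumed rule: first point wins).

    points: iterable of (x, y, z) tuples in metres
    labels: iterable of predicted tree IDs, one per point
    voxel:  edge length of the cubic cell in metres (0.05 m = 5 cm)
    """
    seen = {}
    for (x, y, z), label in zip(points, labels):
        # Integer cell index of the point along each axis.
        key = (
            math.floor(x / voxel),
            math.floor(y / voxel),
            math.floor(z / voxel),
        )
        # Only the first point encountered in a cell is kept.
        if key not in seen:
            seen[key] = ((x, y, z), label)
    kept = list(seen.values())
    return [p for p, _ in kept], [lab for _, lab in kept]


# Hypothetical example: four points, two of them in each of two 5 cm cells.
pts = [(0.00, 0.0, 0.0), (0.01, 0.01, 0.01), (0.11, 0.0, 0.0), (0.13, 0.0, 0.0)]
ids = [1, 1, 2, 2]
down_pts, down_ids = voxel_downsample(pts, ids)
print(len(down_pts), down_ids)
```

If submissions must align point-for-point with the provided 5 cm clouds, a nearest-neighbor transfer of labels onto those points may be more appropriate than re-deriving the grid as done here.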

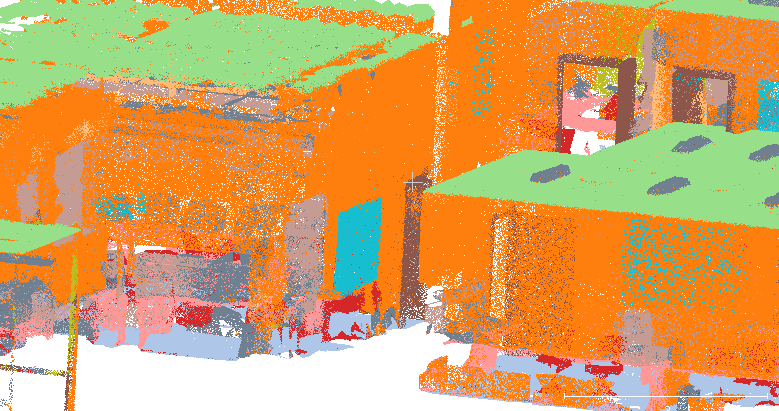

Figure 2. Examples of the dataset with rich diversity and tree-level annotation.

The datasets are divided into three categories:

- Training data: used to train or develop your own ITD method; provided during Phase 1 (Method Development).

- Validation data: used to validate your own ITD method; provided during Phase 1 (Method Development).

- Testing data: used to run inference with your own method and obtain a valid score/rank; provided during Phase 2 (Method Testing).

Additional details regarding the dataset, access, and usage terms are available on the online platform.

5. Examples

Two examples are provided: sample code for a DL-based ITD method, to support model development, and an example result, to illustrate result submission.

They can be found on GitHub.

6. Submission

Three submissions are required: the ITD results, a description document, and a Docker file.

The required submissions for each phase are as follows:

- Phase 1: Method Development

Participants can submit delineation results for the provided training dataset and receive evaluation feedback over multiple rounds. This allows participants to improve their results iteratively throughout this phase. Participation in this phase is optional.

- Phase 2: Method Testing

Participants will have one week to refine their ITD model using the provided testing set, which is unseen during the development phase, and to generate and submit their results along with a description of the methods used, in order to obtain their final scores and rankings. Scores and rankings will be considered invalid if the description file is not submitted on time or lacks sufficient detail.

- Phase 3: Result Verification

The top six teams from the testing phase are required to submit additional files for validation. The organizers will execute the ITD models and verify their results. Minor discrepancies between the testing and re-executed results are acceptable, but significant differences are not. If a team in the original top-six list fails to fulfill these requirements, the next highest-ranking team that submits all required materials will replace it.

Further details about the submission requirements, including information and file formats, are available on the online platform. Please ensure all requirements are carefully followed to guarantee that the final scores and rankings are valid.

7. Evaluation

The accuracy of submitted ITD results for the training and testing datasets will be evaluated using standard metrics: precision, recall, and F1-score. Accuracy metrics will be displayed on the leaderboard, with the F1-score serving as the primary metric for ranking.
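As a reminder of how these three metrics relate, the sketch below computes them from counts of true positives (TP), false positives (FP), and false negatives (FN). How a predicted tree is matched to a reference tree, and therefore how TP, FP, and FN are counted, is defined by the organizers and is not specified here; the function name `detection_scores` and the counts in the example are hypothetical.

```python
def detection_scores(tp, fp, fn):
    """Return (precision, recall, f1) from tree-level match counts.

    tp: predicted trees matched to a reference tree
    fp: predicted trees with no matching reference tree
    fn: reference trees with no matching prediction
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Hypothetical counts: 80 matched trees, 20 spurious detections, 10 missed trees.
p, r, f1 = detection_scores(tp=80, fp=20, fn=10)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Because F1 is a harmonic mean, a method cannot rank well by over-segmenting (high recall, low precision) or under-segmenting (high precision, low recall) alone.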

8. Schedule

- Contest opening (Oct. 1st, 2025): registration begins, and the training and validation sets are released.

- Development phase (Oct. 1st, 2025 → Jan. 17th, 2026): participants may submit their 3D ITD results for the validation set over multiple rounds and monitor their real-time ranking. The training set may be updated continuously during this phase.

- Testing phase (Jan. 18th, 2026 → Jan. 22nd, 2026): the testing set will be released on Jan. 18th, 2026. Participants must submit their ITD results for the testing set along with a method description to obtain a valid score/rank.

- Verification phase (Jan. 19th, 2026 → Jan. 25th, 2026): the top six teams from the testing phase must submit required files for verification, including project code, Docker file, and ITD results.

- Winner determination (Jan. 26th, 2026 → Feb. 10th, 2026): organizers will verify the submitted files and determine the final winners.

- Winner announcement (Feb. 10th, 2026): the final top six teams will be announced following the result check.

Note: Until official data usage permissions are announced on this website, the data provided exclusively by this Contest may not be used for any purpose outside this Contest, such as applications or publications.

The contest organizers reserve the right to adjust the timetable, tasks, terms, and arrangement as necessary based on the contest’s progress.

References

Liang, X., Hyyppä, J., Kaartinen, H., Lehtomäki, M., Pyörälä, J., Pfeifer, N., Holopainen, M., Brolly, G., Francesco, P., Hackenberg, J., Huang, H., Jo, H.-W., Katoh, M., Liu, L., Mokroš, M., Morel, J., Olofsson, K., Poveda-Lopez, J., Trochta, J., Wang, D., Wang, J., Xi, Z., Yang, B., Zheng, G., Kankare, V., Luoma, V., Yu, X., Chen, L., Vastaranta, M., Saarinen, N., Wang, Y., 2018. International benchmarking of terrestrial laser scanning approaches for forest inventories. ISPRS Journal of Photogrammetry and Remote Sensing 144, 137–179. https://doi.org/10.1016/j.isprsjprs.2018.06.021

Liang, X., Qi, H., Deng, X., Chen, J., Cai, S., Zhang, Q., Wang, Y., Kukko, A., Hyyppä, J., 2024. ForestSemantic: a dataset for semantic learning of forest from close-range sensing. Geo-spatial Information Science 1–27. https://doi.org/10.1080/10095020.2024.2313325

WG III/1