Planned Benchmark Datasets

Multi-modal time-series fusion and weakly supervised continual learning for deforestation mapping

Most deforestation monitoring systems rely on costly semi-automated procedures that require manual annotation of large volumes of data. Although satellite image time series are widely used for automatic land use and land cover mapping, the recent machine learning and deep learning approaches developed for these tasks are difficult to apply to forest monitoring because of atmospheric noise and the high variability of landscapes.

This benchmark dataset will therefore focus on deforestation in Brazil through two tasks, each in a different study area. The objective is to foster new research in machine learning and deep learning for forest monitoring. More specifically, we will address multi-modal time-series data fusion (Task 1) and weakly supervised continual learning (Task 2).

Task 1: The first task will consist of detecting deforested areas in Roraima state, Brazil. Cloud coverage in this area is very high, which rules out relying on optical images alone. Hence, the dataset will include multi-modal time-series data from the Sentinel-1, Sentinel-2 and Planet satellites. The reference data will be gathered from PRODES, which provides annual estimates of the deforestation rate in Brazil along with annual maps of primary forests and deforested areas. Results of baseline models (e.g., temporal convolutional neural networks or Transformer-based architectures) will be provided.
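The announcement lists the sensors but not how they will be combined, so the following is only a hypothetical sketch of one simple early-fusion step, not the benchmark's protocol. It assumes each modality arrives as a per-pixel time series of (dates, features) with cloudy optical observations already marked as NaN, resamples every modality onto a common date grid by carrying forward the last valid observation, and concatenates the features. The function names, the NaN cloud convention and the forward-fill strategy are all assumptions.

```python
import numpy as np


def last_valid_on_grid(dates, values, grid_dates):
    """Resample one modality onto grid_dates using the last valid observation.

    Hypothetical helper. Rows containing NaN (e.g. cloudy optical
    acquisitions) are discarded; grid dates before the first valid
    observation are filled with NaN.
    """
    dates = np.asarray(dates, dtype=float)
    values = np.asarray(values, dtype=float)
    valid = ~np.isnan(values).any(axis=1)  # drop cloudy or missing acquisitions
    dates, values = dates[valid], values[valid]
    order = np.argsort(dates)
    dates, values = dates[order], values[order]
    # Index of the latest observation taken on or before each grid date.
    idx = np.searchsorted(dates, np.asarray(grid_dates, dtype=float), side="right") - 1
    out = np.full((len(grid_dates), values.shape[1]), np.nan)
    has_obs = idx >= 0
    out[has_obs] = values[idx[has_obs]]
    return out


def fuse_modalities(modalities, grid_dates):
    """Early fusion: concatenate all resampled modalities into a (T, C) array.

    `modalities` is a list of (dates, values) pairs, e.g. Sentinel-1
    backscatter, Sentinel-2 reflectances and Planet bands for one pixel.
    """
    resampled = [last_valid_on_grid(d, v, grid_dates) for d, v in modalities]
    return np.concatenate(resampled, axis=1)
```

A temporal CNN or Transformer baseline could consume the resulting (T, C) array directly. Forward filling is chosen here only for simplicity; models that handle irregular sampling natively would avoid the imputation altogether.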

Task 2: The second task will consist of detecting deforestation events in Mato Grosso state, Brazil. For this task, only weak annotations extracted from DETER, an alert monitoring system, will be available. DETER provides the acquisition date of the optical satellite image on which annotators visually identified a deforestation event. However, the actual event may have started a few days earlier. The objective of the task will be to retrieve the exact start date of each deforestation event from a stream of daily Planet images.
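The task amounts to a retrospective search: given the DETER alert date, find the day within a short look-back window on which the signal changed. As a hypothetical baseline (not the benchmark's method), the sketch below assumes a per-pixel daily vegetation-index series derived from Planet images, with cloudy days set to NaN, and returns the single change point that minimizes the total within-segment squared error, i.e. a least-squares mean-shift detector. The function name and the `max_lookback` parameter are illustrative assumptions.

```python
import numpy as np


def estimate_event_start(signal, alert_day, max_lookback=10):
    """Estimate the first day of a deforestation event before a DETER alert.

    signal: daily per-pixel values (e.g. a vegetation index), NaN = cloudy day.
    alert_day: index of the day on which the alert was raised.
    Returns the day k that best splits signal[:alert_day + 1] into a
    "before" segment signal[:k] and an "after" segment signal[k:alert_day + 1],
    or None if no candidate has clear observations on both sides.
    """
    signal = np.asarray(signal, dtype=float)
    best_day, best_cost = None, np.inf
    first = max(1, alert_day - max_lookback)  # only search a few days back
    for k in range(first, alert_day + 1):
        before = signal[:k]
        after = signal[k:alert_day + 1]
        before = before[~np.isnan(before)]  # ignore cloudy days
        after = after[~np.isnan(after)]
        if before.size == 0 or after.size == 0:
            continue  # need at least one clear observation on each side
        cost = ((before - before.mean()) ** 2).sum() + ((after - after.mean()) ** 2).sum()
        if cost < best_cost:
            best_day, best_cost = k, cost
    return best_day
```

For example, a series that stays near 0.8 for ten days and then drops to 0.2, with an alert raised on day 14, yields day 10 as the estimated start. Real clearing signals are noisier and more gradual, which is precisely why the weak DETER dates make this a challenging learning problem.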

Other datasets proposed by the WG members

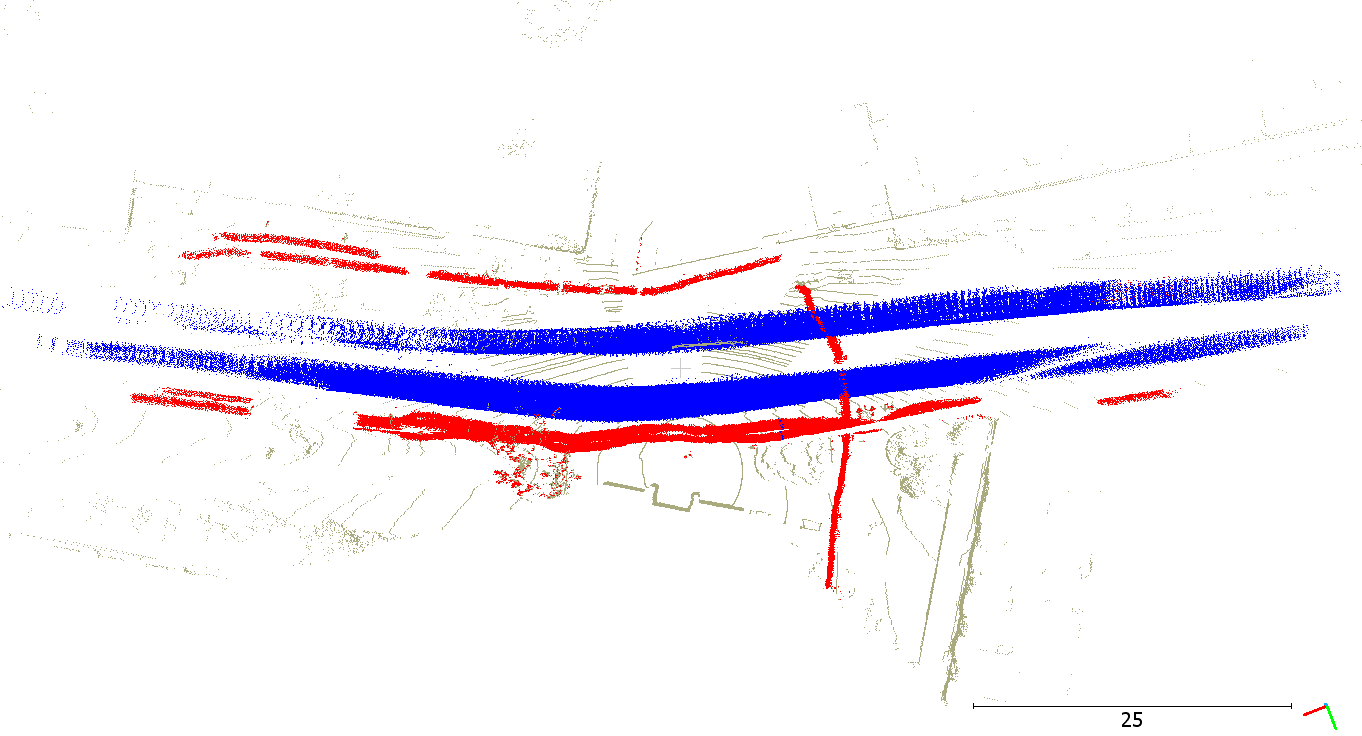

[released] Online Lidar segmentation

HelixNet is the largest dataset of point cloud time sequences (70% larger than KITTI). Each point is annotated with its semantic label and with sensor information (sensor position and rotation, packet release time, etc.), allowing precise measurement of the real-time readiness of semantic segmentation algorithms. The dataset will be presented in an ECCV 2022 article and has already been released in open access on Zenodo.
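Per-point packet release times are what make latency measurable. The actual evaluation protocol is defined by the dataset's authors, not here; the following is a hypothetical sketch of one way such timestamps could be used. It simulates a single worker that segments sensor packets in arrival order and computes each packet's latency from its release time to the end of its processing. The function name and the single-worker, in-order assumption are illustrative, and processing times would have to come from timing an actual model.

```python
from typing import List, Sequence


def streaming_latencies(
    release_times: Sequence[float], processing_times: Sequence[float]
) -> List[float]:
    """Latency of each packet for a single worker processing packets in order.

    A packet can start only once it has been released by the sensor and the
    worker has finished the previous packet.
    """
    latencies = []
    worker_free_at = float("-inf")
    for release, duration in zip(release_times, processing_times):
        start = max(worker_free_at, release)  # wait for the data and for the worker
        worker_free_at = start + duration
        latencies.append(worker_free_at - release)
    return latencies
```

With packets released every 100 ms, a model needing 50 ms per packet keeps a constant 50 ms latency, whereas one needing 150 ms falls further behind with every packet (150, 200, 250 ms, ...). Under this simplified model, bounded latency over a whole sequence is a reasonable proxy for real-time readiness.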

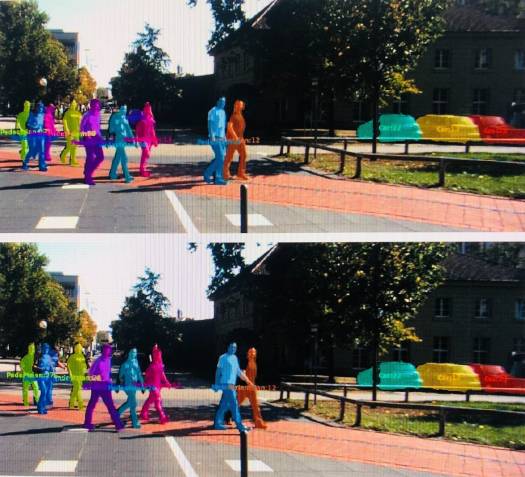

[planned] 3D moving pedestrian detection and tracking

Most current 3D multiple object tracking (MOT) benchmarks focus on traffic scenes (e.g., KITTI, nuScenes), in which pedestrian movement is poorly represented. Meanwhile, pedestrian detection and tracking in dense crowds have been extensively investigated using CCTV images and videos. Unlike these image-based approaches, this benchmark will focus on detecting and tracking people in dense crowds from 3D point clouds.

WG II/5