ISPRS WG II/3

3D Scene Reconstruction for Modeling & Mapping

Our Mission

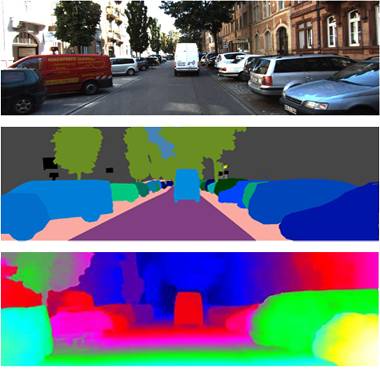

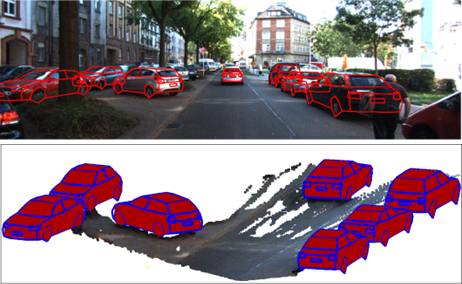

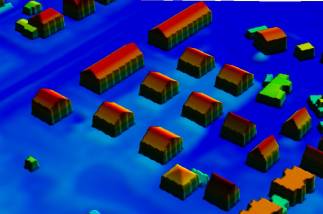

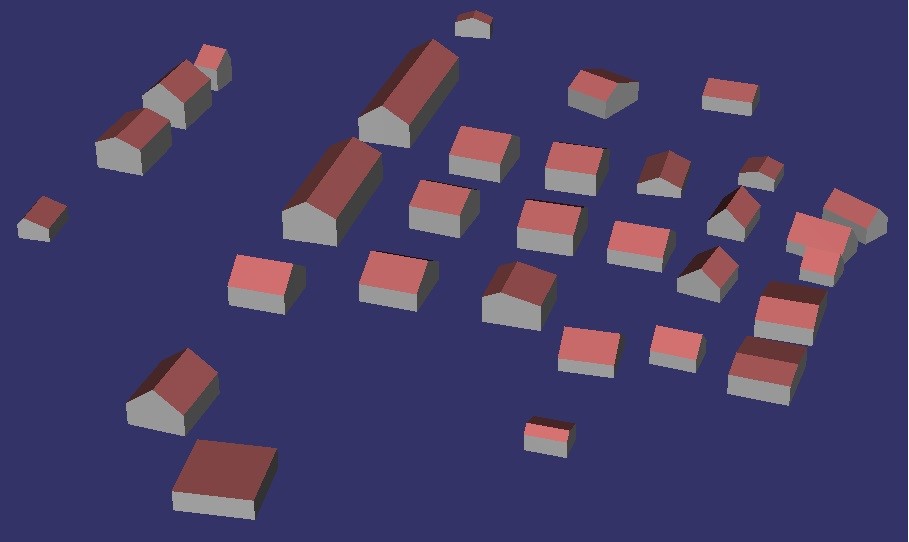

ISPRS Working Group II/3 aims to advance the automatic recognition and 3D reconstruction of objects in complex scenes from images, point clouds, and other sensor data. The emphasis is on scenes that contain several object classes, e.g. urban scenes including vegetation, buildings, roads, street furniture, cars and pedestrians, or indoor scenes including furniture and different types of objects. One of the main applications of interest to our WG is the generation of high-resolution 3D city models, but the scope of the WG also includes any form of object detection in complex environments, e.g. in the context of robotics or autonomous driving. It is also our goal to evaluate methods for object extraction and the suitability of different sensors for that task.

To this end, the WG organizes workshops to exchange the latest developments in object recognition and reconstruction, collaborating closely with other WGs both from TC II and from other TCs. Furthermore, the WG strives to organize at least one benchmark related to its scope, and it intends to contribute to Theme Issues of journals published by the ISPRS or other journals related to the field.

Working Group Officers

| Role | Name | Affiliation and address | Phone |
| --- | --- | --- | --- |
| Chair | Ksenia Bittner | German Aerospace Center (DLR), Earth Observation Center (EOC), Remote Sensing Technology Institute, Photogrammetry and Image Analysis, Oberpfaffenhofen, 82234 Weßling, GERMANY | +49 8153 28 4285 |
| Co-Chair | Franz Rottensteiner | Institute of Photogrammetry and GeoInformation, Leibniz University Hannover, Nienburger Straße 1, 30167 Hannover, GERMANY | +49 511 762 3893 |
| Co-Chair | Friedrich Fraundorfer | Institute of Computer Graphics and Vision, Graz University of Technology, Inffeldgasse 16/II, 8010 Graz, AUSTRIA | +43 316 873 5020 |
| Secretary | Max Mehltretter | Institute of Photogrammetry and GeoInformation, Leibniz University Hannover, Nienburger Straße 1, 30167 Hannover, GERMANY | +49 511 762 2981 |

Supporters

| Name | Affiliation and address | Phone |
| --- | --- | --- |
| Jinha Jung | Lyles School of Civil Engineering, Purdue University, 550 Stadium Mall Drive, West Lafayette, IN 47907-2051, USA | +1 765 496 1267 |
| Arpan Kusari | University of Michigan Transportation Research Institute, University of Michigan, 2901 Baxter Road, Ann Arbor, MI 48109, USA | +1 734 763 4806 |
| Martin Weinmann | Institute of Photogrammetry and Remote Sensing, Karlsruhe Institute of Technology (KIT), Englerstraße 7, 76131 Karlsruhe, GERMANY | +49 721 608 47302 |

Terms of Reference

- Models and techniques for extracting features, geometrical primitives and objects from data acquired by airborne, spaceborne and/or terrestrial sensors, including object detection and 3D object reconstruction in complex scenes.

- Semantic interpretation of data of various origins and generated by various sensors, including methods for semantic segmentation and panoptic segmentation, potentially involving an interpretation of the entire scene, and considering both outdoor and indoor environments.

- Integration of semantic interpretation and 3D reconstruction of complex scenes, including point-based methods and methods based on object models, e.g. using implicit representations.

- Generation and update of high-resolution 3D city models and road databases, including mesh-based, polyhedral, parametric and multi-scale representations, possibly with level-of-detail (LOD) and (semantic) attributes, and texturing of the resulting 3D models.

- Object detection, recognition and 3D reconstruction in the context of robotics or autonomous driving.

- Multi-modal data fusion: performing any of the tasks mentioned above by exploiting the complementarity of different viewpoints (spaceborne, nadir/oblique aerial, UAV, fixed/mobile terrestrial), different sensor types (monoscopic/stereoscopic images, LiDAR, (In)SAR), and existing data (traditional cartographic products, CAD models, urban GIS, crowdsourced data).

- Methods addressing any of the tasks mentioned above, while focusing on handling noisy or out-of-distribution input data, including techniques for uncertainty estimation and uncertainty propagation.
