The ISPRS International Contest of Individual Tree Crown (ITC) Segmentation

- Background

Canopies play an essential role in the biophysical functions of forest environments. The presence and structure of canopies strongly influence temperature, vapor concentration, and the radiation regime in forests. At the individual tree level, the position, size, and geometry of a canopy are important structural characteristics that determine its functions. Hence, individual tree delineation (ITD) from remote sensing (RS) data, which provides the structural characteristics of the detected trees and their canopies, has become one of the most important topics in forest RS research over the past two decades. The International Society for Photogrammetry and Remote Sensing (ISPRS) international contest on individual tree crown (ITC) instance segmentation aims to establish the state of the art in tree crown instance segmentation from high-resolution Earth observation images through a benchmark study of different methodological approaches.

Participants will use the high-resolution Earth observation images and the corresponding individual tree crown mask annotations provided by the organizer to develop their own models, which are then used to automatically segment individual tree crowns in other high-resolution images. The organizer will evaluate and benchmark the ITC results submitted by the participants using standardized procedures, analyze the outcomes, and report the findings to establish the state of the art and identify open challenges.

- Tasks

The tasks of the ITC segmentation contest include:

- to carry out ITC delineation/segmentation. Participants use their own automated methods/models to extract the crown masks of individual trees from the high-resolution aerial images provided by the organizer.

- to document the applied methods/models. Participants describe their methods/models, including a description and figures of the method pipeline or model architecture. If a method has not yet been published, a description with sufficient detail (e.g., 1-2 A4 pages) is required so that the organizer can understand it. If the method has been published, please provide the reference together with a short description (e.g., 1 A4 page). All descriptions will be used for the joint publication of the results. The organizer may request additional clarification during the evaluation process.

- to submit the final results, including the ITC delineation/segmentation results and the method descriptions. Results without sufficient documentation will not be eligible for prizes.

- Dataset

- Overview

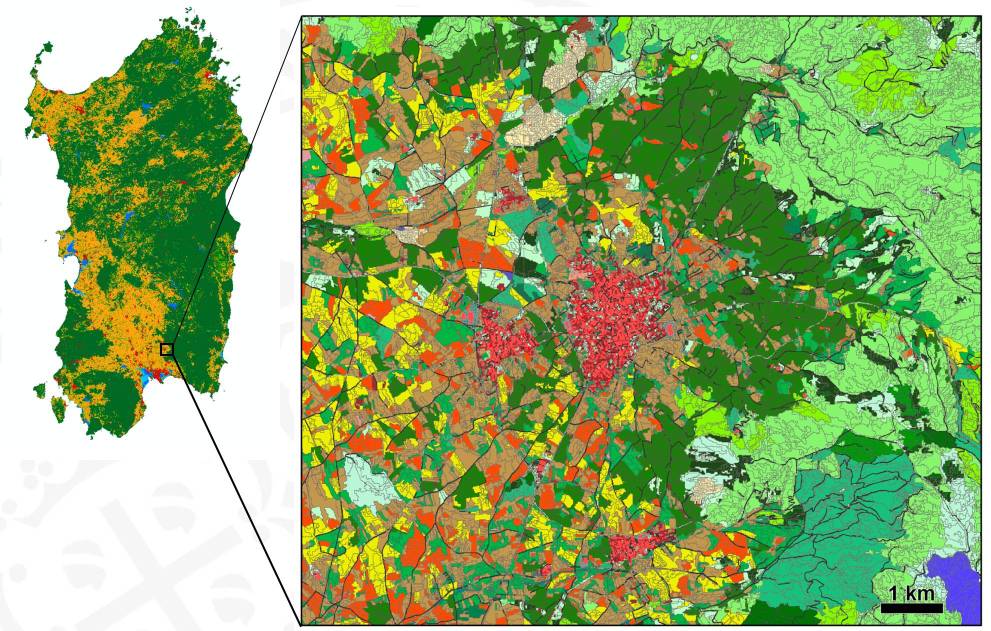

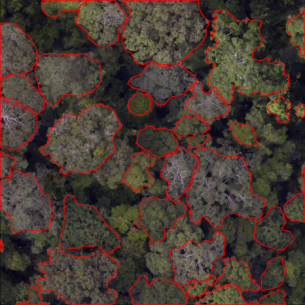

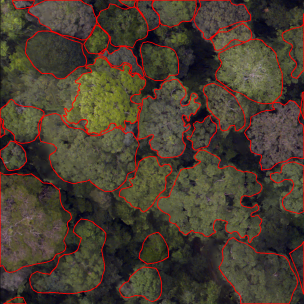

The training and testing data were collected at different locations worldwide and from different sources, so as to represent various forest types and to include data from diverse sensors. This diversity of forest types, conditions, locations, and sensors is intended to characterize the algorithms’ performance under different conditions and to improve their transferability. Figure 1 shows two example images overlaid with their annotations.

Figure 1. Example images with their corresponding annotations

Training data, consisting of high-resolution images and the corresponding individual tree crown mask annotations, will be provided for model training and development.

- Data format

- Image Data: The images are provided in “.png” format with three bands: Red, Green, and Blue.

- Annotation: The individual tree crown masks are stored in “.json” files organized according to the MS COCO format. The mask coordinates are given in the image coordinate system.
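To make the annotation layout concrete, the following is a minimal sketch that reads a COCO-style annotation file and groups the crown polygons by image. It assumes polygon segmentations (COCO also allows RLE masks) and a single category; the inline example, its file name, category name, and coordinates are made up, and the organizer's actual files may contain additional fields. Only the Python standard library is used.

```python
import json
from collections import defaultdict

# Hypothetical minimal annotation in MS COCO layout (made-up values).
EXAMPLE = """
{
  "images": [{"id": 1, "file_name": "tile_0001.png", "width": 512, "height": 512}],
  "categories": [{"id": 1, "name": "tree"}],
  "annotations": [
    {"id": 10, "image_id": 1, "category_id": 1, "iscrowd": 0,
     "segmentation": [[10, 10, 60, 10, 60, 50, 10, 50]],
     "bbox": [10, 10, 50, 40], "area": 2000}
  ]
}
"""


def polygon_area(flat_xy):
    """Shoelace area of one COCO polygon given as [x1, y1, x2, y2, ...]."""
    xs, ys = flat_xy[0::2], flat_xy[1::2]
    n = len(xs)
    s = sum(xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n))
    return abs(s) / 2.0


def crowns_per_image(coco):
    """Group crown polygons by image file name (RLE annotations are skipped)."""
    names = {img["id"]: img["file_name"] for img in coco["images"]}
    grouped = defaultdict(list)
    for ann in coco["annotations"]:
        # A polygon segmentation is a list of polygons: one crown may have several parts.
        if isinstance(ann["segmentation"], list):
            grouped[names[ann["image_id"]]].append(ann["segmentation"])
    return dict(grouped)


coco = json.loads(EXAMPLE)
for name, crowns in crowns_per_image(coco).items():
    for parts in crowns:
        print(name, sum(polygon_area(p) for p in parts))
```

Grouping by `image_id` pairs each `.png` tile with its crowns; the shoelace area offers a quick, approximate sanity check against the stored `area` field.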

- Data composition

The data are divided into training, validation, and testing sets. The training and validation sets comprise aerial images collected from various areas around the world. The training set will be continually updated and enriched during the validation phase. The testing set includes images from the same scenarios as the training set, as well as images of different scenarios from other areas, to evaluate the transferability of the applied methods.

- Data usage

Participants should not use the training data of this contest to publish their own results before the joint publication of this project has appeared. After the joint publication, the training data will be released as open data for non-commercial applications.

- Submission

The submitted prediction results should be stored in a “.json” file following the MS COCO format for evaluation.
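The exact segmentation encoding accepted by the organizer's evaluator is not specified here. As a hedged illustration, the sketch below converts a binary crown mask into COCO's uncompressed run-length encoding (RLE) and wraps it in a detection entry with the usual COCO result fields (`image_id`, `category_id`, `segmentation`, `score`). The single category id of 1, the helper names, and the toy mask are assumptions for illustration only.

```python
import json


def encode_uncompressed_rle(mask):
    """Encode a binary mask (list of rows of 0/1) as COCO uncompressed RLE.

    Pixels are scanned in column-major order, and the counts always start
    with the length of the first run of zeros (which may be 0).
    """
    h, w = len(mask), len(mask[0])
    counts, current, run = [], 0, 0
    for x in range(w):
        for y in range(h):
            pixel = 1 if mask[y][x] else 0
            if pixel == current:
                run += 1
            else:
                counts.append(run)
                current, run = pixel, 1
    counts.append(run)
    return {"size": [h, w], "counts": counts}


def make_result(image_id, mask, score, category_id=1):
    """One detection entry in the COCO results layout (category id assumed)."""
    return {
        "image_id": image_id,
        "category_id": category_id,
        "segmentation": encode_uncompressed_rle(mask),
        "score": float(score),
    }


# Toy 2 x 3 crown mask (made up).
mask = [
    [0, 1, 1],
    [0, 1, 0],
]
results = [make_result(image_id=1, mask=mask, score=0.87)]
print(json.dumps(results))
```

Because COCO RLE always begins with a run of zeros, a mask whose first pixel belongs to a crown starts with a count of 0. If the evaluator expects compressed RLE strings instead, pycocotools' `mask.frPyObjects` can convert an uncompressed RLE dict into that form.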

- Results submission

The contest has three phases, each with different submission requirements:

Phase 1: Validation period

The training and validation data are released when the contest begins. Participants may submit predictions for the validation data multiple times during the validation period; each submission is evaluated and the evaluation results are returned as feedback, allowing participants to improve their outcomes throughout the period. Participation in this phase is optional.

Phase 2: Testing period

The testing data will be released one week before the deadline, giving participants one week to process them with the approaches they developed during the validation phase. The final ranking of the contest will be based on the results on the testing data. The results and the description of the applied methods must be submitted together. The method description must be provided as a Microsoft Word file listing the real names and affiliations of the method's authors; please make sure it is clear enough for the organizer to understand. The latest submission of testing results and method description received before the deadline will be used for evaluation.

Phase 3: Determination of winners

After the testing period, the top six teams will be required to submit a Docker image of their model to the contest organizers. The organizers will run inference with these Docker images and compute the average precision (AP) of the results. The ranking of the top six teams will be determined by the results generated with the Docker images.

- Supplementary material

Participants can provide supplementary materials if they wish. The supplementary materials are optional and will not necessarily be included in the final report and final publication.

- Evaluation

The organizers will evaluate each algorithm by comparing the submitted results with the ground truth. The same evaluation procedure will be applied to the results of all participants.

- Strategy

Two aspects will be considered in the evaluation:

- The accuracy of ITC instance detection and shape delineation.

- The generalization and transferability of the models across multiple scenarios.

- Metrics

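A prediction is counted as a true positive if its overlap with a ground-truth crown, measured by IoU (Intersection over Union, i.e., the area of their intersection divided by the area of their union), meets a given threshold, e.g., 50% or 75%. The evaluation will include metrics such as precision, recall, AP50, and AP75 (average precision at IoU thresholds of 0.5 and 0.75, respectively).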
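The matching rule above can be illustrated with a simplified, single-image, single-class sketch: masks are represented as sets of pixel coordinates, predictions are matched greedily to ground-truth crowns in descending score order, and AP is computed with 101-point interpolation in the style of COCO. This is not the organizer's evaluation code; the official COCO evaluator (`COCOeval` in pycocotools) additionally accumulates over all images and handles crowd regions, area ranges, and a cap on detections per image. The toy masks and helper names are made up.

```python
def mask_iou(a, b):
    """IoU of two crown masks, each given as a set of (row, col) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def match(preds, gts, thr):
    """Greedily match predictions to ground-truth crowns at one IoU threshold.

    preds: list of (score, pixel_set); gts: list of pixel_set.
    Returns one bool per prediction (True = true positive), ordered by
    descending score. Each ground-truth crown can be matched at most once.
    """
    matched, hits = set(), []
    for _, pred in sorted(preds, key=lambda t: t[0], reverse=True):
        best_j, best_iou = None, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            iou = mask_iou(pred, gt)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
        hits.append(best_j is not None)
    return hits


def precision_recall(preds, gts, thr=0.5):
    """Precision and recall over all submitted predictions at one threshold."""
    tp = sum(match(preds, gts, thr))
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


def average_precision(preds, gts, thr=0.5):
    """AP at one IoU threshold with 101-point interpolation (COCO-style)."""
    hits = match(preds, gts, thr)
    if not gts or not hits:
        return 0.0
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Interpolate: precision at recall r is the best precision at any recall >= r.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    total = 0.0
    for r in (i / 100 for i in range(101)):
        # First point on the curve that reaches recall r (0 if never reached).
        total += next((p for p, rec in zip(precisions, recalls) if rec >= r), 0.0)
    return total / 101


def square(r0, c0, h, w):
    """Toy rectangular mask as a set of (row, col) pixels."""
    return {(r, c) for r in range(r0, r0 + h) for c in range(c0, c0 + w)}


gts = [square(0, 0, 4, 4), square(10, 10, 4, 4)]
preds = [
    (0.9, square(0, 0, 4, 5)),    # IoU 16/20 = 0.8 with the first crown
    (0.8, square(10, 11, 4, 4)),  # IoU 12/20 = 0.6 with the second crown
    (0.6, square(20, 20, 4, 4)),  # no overlap: false positive
]
print("AP50:", average_precision(preds, gts, 0.5))
print("AP75:", average_precision(preds, gts, 0.75))
```

In this toy case both of the first two predictions are true positives at IoU 0.5, but only the first one is at 0.75, so AP75 comes out lower than AP50; this is how stricter thresholds penalize imprecise crown boundaries.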

- Joint publication

All participants who provide satisfactory results will be invited to co-author a joint paper on this study, with 1-2 co-authors per participant, as nominated by the participant.

- Schedule

- Preliminary opening (January 22nd, 2024)

- Contest opening (January 28th, 2024): registration starts and the training and validation sets are released.

- Validation phase (January 28th → June 14th, 2024): participants may submit validation results multiple times and check their real-time ranking. The training set will be updated continuously during this phase.

- Testing phase (June 15th → 21st, 2024): the testing set will be released on June 15th. Participants submit their testing results and method descriptions.

- Deadline (June 21st, 2024): the deadline of final submission.

- Winner announcement (Before July 15th, 2024)

- Award ceremony (Nov, 2024): ISPRS Technical Commission III Mid-term Symposium “Beyond the canopy: technology and applications of remote sensing”, Belém, Pará, Brazil

The baseline time zone for all deadlines is UTC+0 (GMT). For example, the final deadline of this contest is June 22nd, 00:00 UTC, which corresponds to June 22nd, 08:00 in Beijing (UTC+8), June 22nd, 02:00 in Paris (CEST, UTC+2), and June 21st, 17:00 in Los Angeles (PDT, UTC-7).
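The deadline conversion can be checked with Python's standard `datetime` module. This sketch uses fixed UTC offsets valid in late June 2024, when Paris observes CEST (UTC+2) and Los Angeles observes PDT (UTC-7); the helper name `local_deadline` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Final deadline: June 22nd 2024, 00:00 UTC (i.e., the end of June 21st in UTC+0).
DEADLINE_UTC = datetime(2024, 6, 22, 0, 0, tzinfo=timezone.utc)


def local_deadline(offset_hours):
    """Return the deadline expressed in a fixed UTC offset given in hours."""
    return DEADLINE_UTC.astimezone(timezone(timedelta(hours=offset_hours)))


# Offsets in effect in late June 2024 (daylight saving time in Paris and Los Angeles).
for place, offset in [("Beijing", 8), ("Paris", 2), ("Los Angeles", -7)]:
    print(f"{place}: {local_deadline(offset):%Y-%m-%d %H:%M}")
```

Fixed offsets keep the sketch free of time zone database dependencies; for dates outside the summer period, the Paris and Los Angeles offsets would differ.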

The contest organizers reserve the right to adjust the timetable according to the progress of the contest.

- Prize

Six teams will be selected as winners of the following prizes:

- The 1st-ranked team will win the first-place prize. The 2nd- and 3rd-ranked teams will win the second-place prize. The 4th- to 6th-ranked teams will win the third-place prize.

- The first-, second-, and third-place prizes will be $3k, $2k, and $1k, respectively.

- In addition, one team that makes a significant contribution to the contest will receive the contribution prize of $1k.

- Contact

WGIII/1 Officers: Xinlian Liang, Maria Teresa Melis, Weishu Gong, Martin Mokroš

Please notify xinlian.liang@whu.edu.cn of your participation.

- Acknowledgement

This contest is associated with the Remote Sensing Image Intelligent Processing Algorithm Contest (RSIPAC) as the international track.

Last updated on 28 January 2024

WG III/1